Blinking LEDs

Looking back on my college days, it might seem surprising that I didn’t own an Arduino until my senior year, when I needed it for my final projects. After all, I was studying mechatronics, and Arduino is often where most people in this field begin. So, why the delay? I challenged myself by diving directly into working with microcontrollers. However, as the end of college approached, I realized that time to explore and experiment becomes a rare luxury. Curious about what I mean? Let me take you on a brief journey from those early days of blinking LEDs with microcontrollers to discovering the world of Edge AI and teaching my code to “see the world” through Computer Vision. Join me on this adventure!

I fell in love with embedded systems during my sophomore year of college. Imagine a toy that can move, make sounds, or light up—all controlled by a tiny computer inside, called an embedded system. It’s like the toy’s brain, telling it what to do. My first step into this world was learning how to use microcontrollers, which you can picture as very small computers with limited resources, designed to perform specific tasks. No, you can’t play solitaire or check the weather on them—but you can turn on an LED (think of it as a tiny green light bulb!). While that might not sound impressive at first, microcontrollers can do much more, like interface with wireless devices, control motors, or gather data from various sensors—if you know how to program them.

I programmed them using C, a language that, while not my favorite, is essential for understanding the basics of embedded systems (like blinking LEDs) and scaling up to more complex ideas like real-time operations. To explain this last idea, think of a football flying at you during recess: your body must react instantly to avoid getting hit. Timing is crucial, and even a small delay could land you in the nurse’s office! Another language I learned, though a bit more technical and old-school, was Assembly, which is the closest you can get to talking to the computer directly in 1s and 0s. My time with Assembly was short, though, because soon after, I started working with Single Board Computers (SBCs), essentially small computers that run Linux.
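To make the blinking LED and the idea of a deadline a bit more concrete, here is a minimal sketch. It is written in Python rather than C so it can run on any laptop, and it only simulates the LED. The names `led_is_on` and `missed_deadline`, the one-second blink period, and the 10 ms deadline are all assumptions made up for illustration.

```python
import time

BLINK_PERIOD_S = 1.0  # assumed: one full on/off cycle per second
DEADLINE_S = 0.010    # assumed: each loop pass must finish within 10 ms


def led_is_on(elapsed_s, period_s=BLINK_PERIOD_S):
    """Return True during the first half of each period, False during the second."""
    return (elapsed_s % period_s) < (period_s / 2)


def missed_deadline(started_s, finished_s, deadline_s=DEADLINE_S):
    """Return True if the work took longer than the allowed time."""
    return (finished_s - started_s) > deadline_s


if __name__ == "__main__":
    start = time.monotonic()
    # Poll for about two seconds; on a real board the print would be a pin write.
    while time.monotonic() - start < 2.0:
        pass_start = time.monotonic()
        state = "ON" if led_is_on(pass_start - start) else "OFF"
        print(f"{pass_start - start:5.2f}s LED {state}")
        if missed_deadline(pass_start, time.monotonic()):
            print("  deadline missed on this pass")
        time.sleep(0.25)
```

On a real microcontroller the same idea would usually be driven by a hardware timer instead of a polling loop, but the question stays the same: did the work finish before the deadline?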

A Spark for Computer Vision

Image 1. Ship prototype with LiDAR

For an internship project, I worked on a prototype ship that needed to model its environment using a Light Detection and Ranging sensor (LiDAR, to its friends), and that’s when I started programming in Python. Python was a game changer: it’s flexible, easy to learn, and has user-friendly structures that made it possible for my team and me to develop the drivers we needed. We were able to integrate the LiDAR with the SBC, and by the end of the project, the ship could plot three-dimensional point clouds representing the obstacles the LiDAR detected as it moved. Unfortunately, we didn’t have time to teach the ship to interpret those points, because COVID hit and the internship ended. But that’s when the idea of Computer Vision started to take root in my mind: the possibility of making code see the world.
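If you are wondering what a point cloud looks like in code, here is a rough sketch. It is not the driver we wrote. It assumes each LiDAR reading arrives as a distance plus two angles (azimuth and elevation, in degrees) and converts it into an x, y, z point. The function names and the angle convention are assumptions for illustration, since real sensors each define their own format.

```python
import math


def polar_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert one reading (distance plus two angles) into an (x, y, z) point.

    Assumed convention: azimuth is measured in the horizontal plane from the
    x axis, and elevation is measured upward from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # distance projected onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


def build_point_cloud(readings):
    """Turn a list of (range_m, azimuth_deg, elevation_deg) tuples into 3D points."""
    return [polar_to_xyz(r, az, el) for r, az, el in readings]


if __name__ == "__main__":
    # Three made-up readings: straight ahead, to the side, and slightly upward.
    sample = [(2.0, 0.0, 0.0), (1.5, 90.0, 0.0), (3.0, 45.0, 10.0)]
    for point in build_point_cloud(sample):
        print(tuple(round(c, 3) for c in point))
```

Each tuple that comes out is one dot in the cloud. Plot enough of them and the obstacles around the ship start to take shape.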

After this breakthrough, you might think my journey continued smoothly—but it actually took a two-year hiatus as I shifted focus to Systems Engineering and Project Management. But that’s a story for another time. My pause ended when, in 2022, I had the chance to lead a team in developing a Computer Vision portfolio for the company I was working with. That’s when a powerful concept firmly took root in my mind: Edge AI—essentially the ability to have a pocket-sized AI.

Getting to Know Edge AI

Remember the Single Board Computer (SBC) I used for the ship project? By now, it had evolved to the point where it could “think.” Well, not quite, but that’s the essence of Edge AI. Picture a smart robot that can see, hear, and make decisions. Normally, it would need to ask a distant, powerful computer to help it decide what to do. But with Edge AI, the robot has its own small, local brain that allows it to think and react instantly, without relying on anything else. This ability to process data and make decisions directly on the device opens a world of possibilities, and that’s where my journey continued.

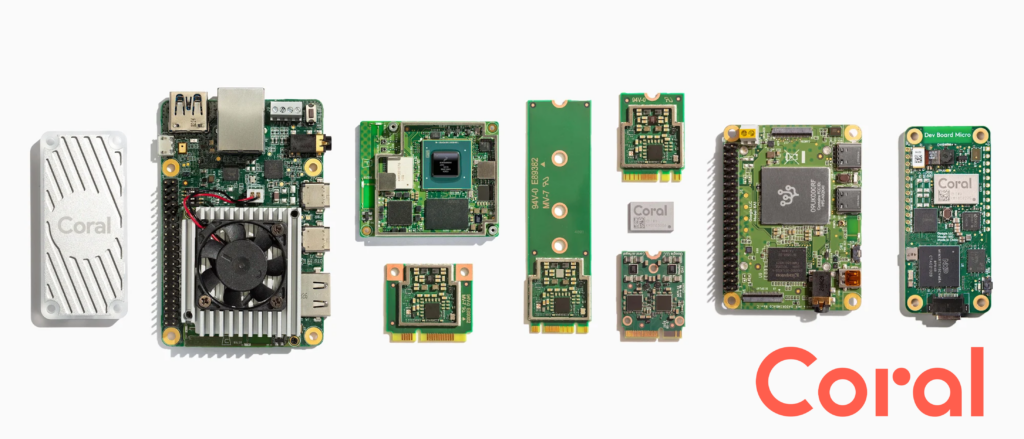

Image 2. Google Coral Machine Learning Single Board Computer

Several advanced Edge AI hardware platforms are available to support this growing field. Nvidia Jetson, for example, is a popular choice due to its robust GPU capabilities, making it ideal for tasks like computer vision and robotics (Nvidia, 2024). The Texas Instruments Edge AI Kit offers a more power-efficient option, designed for embedded systems where energy consumption is critical, such as industrial automation or smart cities (Texas Instruments, 2024). Google Coral, another cutting-edge platform, specializes in TensorFlow Lite models and excels in fast, low-power inferencing, making it perfect for applications in IoT devices (Google Coral, 2020). These platforms give developers a versatile toolkit to build AI solutions tailored to the specific needs of edge computing, balancing performance, power, and adaptability across various industries.

But Edge AI isn’t just about hardware. From a Systems Engineering perspective, deploying these solutions requires a whole ecosystem of technologies that cover the entire development life cycle. This is when I had to dive into full-stack solutions: from the backend, using Python, to the frontend, with HTML, CSS, JavaScript, and React. I also worked with both relational (SQL) and non-relational (Redis) databases, explored cloud technologies like AWS and Google Cloud, and learned about containerization with Docker. Additionally, I worked with custom embedded Linux systems optimized for real-time operations (Yocto), MQTT communication, and my personal favorite: solutions architecture and MLOps.

Let’s briefly explore each of these technologies:

Image 3. Edge AI Ecosystem Technologies

The backend is the “behind-the-scenes” part of a website or app, where Python helps manage the data, run the logic, and send information to the user. It is the code that keeps things working, even though you can’t see it.

The frontend is the part of the website or app that you see and interact with. HTML creates the structure, CSS makes it look nice, JavaScript makes it dynamic and interactive, and React is a JavaScript library that builds the interface out of reusable components, which makes everything easier to manage.

For relational databases, think of a big spreadsheet where everything is organized in tables with rows and columns. SQL databases use this structure to store and connect data in a clear, organized way, making it easy to find and update.
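Here is a small sketch of the “tables that connect to each other” idea, using the SQLite database that ships with Python. The table names, column names, and sample values are invented for this example and are not from any real project.

```python
import sqlite3


def demo_relational():
    """Create two linked tables in memory and summarize the readings per device."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE devices (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE readings ("
        "id INTEGER PRIMARY KEY, "
        "device_id INTEGER NOT NULL REFERENCES devices(id), "
        "distance_m REAL NOT NULL)"
    )
    conn.execute("INSERT INTO devices (id, name) VALUES (1, 'lidar-front')")
    conn.executemany(
        "INSERT INTO readings (device_id, distance_m) VALUES (?, ?)",
        [(1, 2.5), (1, 3.5)],
    )
    # The JOIN is what connects a row in one table to its rows in the other.
    row = conn.execute(
        "SELECT d.name, COUNT(r.id), AVG(r.distance_m) "
        "FROM devices d JOIN readings r ON r.device_id = d.id "
        "GROUP BY d.id"
    ).fetchone()
    conn.close()
    return row


if __name__ == "__main__":
    print(demo_relational())
```

The `device_id` column is the link between the two tables: each reading points back to the device that produced it.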

Non-relational databases are like fast, flexible storage spaces that don’t need the fixed tables of SQL databases. Redis, for example, keeps data in memory as key-value pairs, so it can store and retrieve it very quickly, which makes it great for real-time applications.

Cloud technologies are like renting supercomputers and data centers over the internet. Providers like AWS and Google Cloud let you store data, run apps, and access powerful tools without needing your own servers.

For containerization, imagine packing up everything an app needs to run (its code, libraries, and settings) into a container. Docker creates these containers, so the app runs the same way no matter where it’s used, making it easy to move and manage.

Yocto helps build custom versions of Linux for embedded systems, containing only what the device actually needs. Paired with real-time kernel options such as the PREEMPT_RT patches, the result can also be fast and predictable. It’s like creating a special, lightweight version of Linux for devices that need to work in real time.

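MQTT is a lightweight publish/subscribe messaging protocol, perfect for devices that need to talk to each other over a network. Devices publish messages to a broker under named topics, and other devices subscribe to the topics they care about. It’s especially good for sending small bits of information quickly, like from sensors in smart homes or cars.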
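To give a feel for the messaging pattern, here is a toy sketch that runs entirely inside one Python program. It is not the real MQTT protocol and does not use any MQTT library. The `TinyBroker` class and the topic names are hypothetical and exist only to show how senders and receivers stay decoupled.

```python
from collections import defaultdict


class TinyBroker:
    """A toy, in-process stand-in for a message broker (not real MQTT)."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic, callback):
        """Register a callback to be called for every message on this topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic, payload):
        """Deliver payload to the topic's subscribers; return how many received it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(topic, payload)
        return len(callbacks)


if __name__ == "__main__":
    broker = TinyBroker()
    broker.subscribe(
        "home/kitchen/temperature",
        lambda topic, payload: print(f"{topic}: {payload}"),
    )
    broker.publish("home/kitchen/temperature", "21.5")
    broker.publish("home/garage/door", "open")  # nobody is listening to this one
```

The sensor never needs to know who is listening. It just publishes to a topic, and whoever subscribed gets the message.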

Solutions architecture is like designing a blueprint for how different parts of a technology system work together. A solutions architect makes sure all the pieces (software, hardware, data, and networks) fit together smoothly to solve a business problem.

MLOps is the process of managing and automating the journey of machine learning models, from development to deployment, ensuring they run efficiently and can be updated easily. It’s like the workflow that keeps AI systems performing at their best.

So, you can imagine that AI is just the tip of the iceberg—one that extends deep into the ocean of knowledge. And this is just in general terms; I haven’t even touched on the real-world use cases yet. I’ve had the fortune of working across different scenarios, and let me tell you, if the iceberg is deep, behind it are entire submarine cities, each representing a unique business domain. The precision required in medical applications is vastly different from the real-time demands of AI-driven cars. We can explore these topics in another post, but for now, understand that every new technology you learn brings you one step closer to building more complex Edge AI solutions.

There’s so much to learn and even more to dream about—but with just a spark of curiosity, I was able to make my code “see” the world. What could you do with that same spark? Maybe even more…

Citations

- Google Coral (2020). Build beneficial and privacy preserving AI. https://coral.ai/

- Nvidia (2024). Jetson Modules. https://developer.nvidia.com/embedded/jetson-modules

- Texas Instruments (2024). Advancing intelligence at the edge. https://www.ti.com/technologies/edge-ai.html